Web scraping is a technique by which a computer program automatically extracts information from a web page.

Web scraping, also called web data mining or web harvesting, is the process of constructing an agent which can extract, parse, download and organize useful information from the web automatically. Some websites offer data sets that are downloadable in CSV format, or accessible via an Application Programming Interface (API). But many websites with useful data don’t offer these convenient options, and that is where scraping comes in.


Scraping involves the following sequence of steps:

- Send an HTTP request to get the web page

- Parse the response to create a structured HTML object

- Search and extract the required data from the HTML object

Python script for web scraping

The rest of this article will guide you through creating a simple Python script for scraping data from a website. This script extracts the news headlines from the Google News website.

Pre-requisites

1. Python

Obviously, you need to have Python installed. If you don't already have it, download and install the latest version for your operating system.

2. Lxml

lxml is a library for processing XML and HTML easily. It is a Pythonic binding for libxml2 and libxslt, combining the power of these two libraries with the simplicity of a Python API. The best way to install lxml is with the pip package management tool. Run the command

pip install lxml

If the installation fails with an error message that ends like ..failed with error code 1, the most likely reason is that you do not have the necessary development packages. In that case, run the following command (shown in Windows command prompt syntax):

set STATICBUILD=true && pip install lxml

3. Requests

Requests is a library for sending HTTP requests. Just like lxml, you can install Requests using pip:

pip install requests

Program Flow

Step 1: The first step in the program is to send an HTTP request and store the entire page contents in an object named response.

Step 2: In the next step, the status code of the response object is checked to see whether the request succeeded.

Step 3: The response text is then parsed to form a tree structure.

Step 4: Inspect the page elements using Developer Tools in your browser and identify the path to the HTML element that contains the data. For example, in the figure below, assume the data you need to extract is titles such as 'Jeremy Corbyn's seven U-turns ahead of Labour conference speech - live'. The path for such elements will then be:

//h2[@class='esc-lead-article-title']/a/span[@class='titletext']/text()

Step 5: The element path identified in the previous step is passed to the xpath function, which returns a list containing all such elements in the page.

Step 6: Finally, you print the list items separated by a new line.
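To see how the path from Step 4 behaves without touching the network, here is a small sketch that applies it to a hand-written HTML fragment. The fragment is an assumption modelled on the class names in the path; it is not a copy of the real page.

```python
from lxml import html

# Hand-written fragment that mimics the structure the XPath expects
# (an assumption for illustration, not the real Google News markup).
SAMPLE_PAGE = """
<html><body>
  <h2 class="esc-lead-article-title">
    <a href="#"><span class="titletext">First headline</span></a>
  </h2>
  <h2 class="esc-lead-article-title">
    <a href="#"><span class="titletext">Second headline</span></a>
  </h2>
  <h2 class="other"><a href="#"><span class="titletext">Ignored</span></a></h2>
</body></html>
"""

HEADLINE_XPATH = "//h2[@class='esc-lead-article-title']/a/span[@class='titletext']/text()"

tree = html.fromstring(SAMPLE_PAGE)     # Step 3: build the tree
headlines = tree.xpath(HEADLINE_XPATH)  # Step 5: list of matching text nodes
print("\n".join(headlines))             # Step 6: one item per line
```

The third heading is skipped because its class attribute does not match the predicate on h2.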

Program source code
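A minimal sketch of the six steps, assuming the Google News address below and assuming the XPath from Step 4 still matches the live page (the site's markup may well have changed since this was written). The names NEWS_URL, extract_headlines and main are illustrative choices.

```python
import requests
from lxml import html

# Assumed values: the address and the XPath may no longer match the live site.
NEWS_URL = "https://news.google.com/"
HEADLINE_XPATH = "//h2[@class='esc-lead-article-title']/a/span[@class='titletext']/text()"


def extract_headlines(page_text):
    """Steps 3 and 5: parse the HTML into a tree and run the XPath query."""
    tree = html.fromstring(page_text)
    return [str(title).strip() for title in tree.xpath(HEADLINE_XPATH)]


def main():
    # Step 1: send the HTTP request.
    response = requests.get(NEWS_URL, timeout=10)
    # Step 2: stop early if the request did not succeed.
    if response.status_code != 200:
        print("Request failed with status code", response.status_code)
        return
    # Steps 3 to 6: parse, query, and print one headline per line.
    print("\n".join(extract_headlines(response.text)))
```

Calling main() runs the whole flow. An empty result usually means the XPath no longer matches the page, in which case Step 4 needs to be repeated against the current markup.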

Sample Output

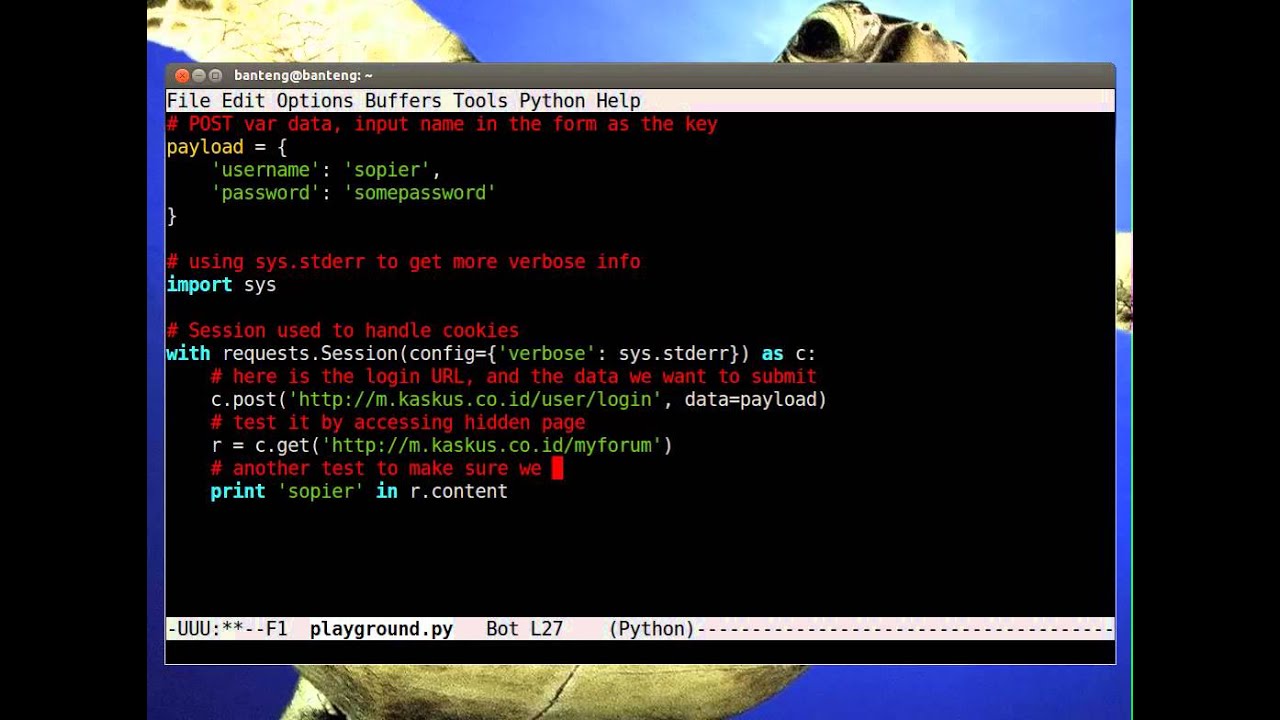

I’ve recently had to perform some web scraping from a site that required login. It wasn’t as straightforward as I expected, so I’ve decided to write a tutorial for it.

For this tutorial we will scrape a list of projects from our Bitbucket account.

The code from this tutorial can be found on my GitHub.

We will perform the following steps:

- Extract the details that we need for the login

- Perform login to the site

- Scrape the required data

For this tutorial, I’ve used the following packages (can be found in the requirements.txt):

Open the login page

Go to the following page: “bitbucket.org/account/signin”. You will see the following page (log out first in case you’re already logged in).

Check the details that we need to extract in order to login

In this section we will build a dictionary that will hold our details for performing login:

- Right click on the “Username or email” field and select “inspect element”. We will use the value of the “name” attribute for this input, which is “username”. “username” will be the key and our user name / email will be the value (on other sites this might be “email”, “user_name”, “login”, etc.).

- Right click on the “Password” field and select “inspect element”. In the script we will need to use the value of the “name” attribute for this input, which is “password”. “password” will be the key in the dictionary and our password will be the value (on other sites this might be “user_password”, “login_password”, “pwd”, etc.).

- In the page source, search for a hidden input tag called “csrfmiddlewaretoken”. “csrfmiddlewaretoken” will be the key and value will be the hidden input value (on other sites this might be a hidden input with the name “csrf_token”, “authentication_token”, etc.). For example “Vy00PE3Ra6aISwKBrPn72SFml00IcUV8”.

We will end up with a dict that will look like this:
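A sketch of that dictionary, using the three keys identified above. The values are placeholders (an assumption for illustration): the credentials are your own, and the token is read from the login page at run time.

```python
# Placeholder values: replace the first two with your own credentials.
# The token is filled in later, once it has been read from the login page.
payload = {
    "username": "<USER NAME>",
    "password": "<PASSWORD>",
    "csrfmiddlewaretoken": "<CSRF_TOKEN>",
}
```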

Keep in mind that this is the specific case for this site. While this login form is simple, other sites might require us to check the request log of the browser and find the relevant keys and values that we should use for the login step.

For this script we will only need to import the following:

First, we would like to create our session object. This object will allow us to persist the login session across all our requests.
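A minimal sketch of creating the session with the Requests library. The variable name session_requests and the hand-set cookie are assumptions used only to show that the session keeps state between calls.

```python
import requests

# One session object is reused for every request, so cookies set by the
# site (including the login cookie) are sent back automatically.
session_requests = requests.session()

# Illustration only: set a cookie by hand to show that the session keeps it.
session_requests.cookies.set("example_cookie", "value")
print(session_requests.cookies.get("example_cookie"))
```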

Second, we would like to extract the CSRF token from the web page; this token is used during login. For this example we are using lxml and XPath, but we could have used a regular expression or any other method that extracts this data.

** More about xpath and lxml can be found here.
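The token extraction could look roughly like this. It assumes the token lives in a hidden input named “csrfmiddlewaretoken”, as described earlier; the function name extract_csrf_token is an illustrative choice.

```python
from lxml import html

# Assumed XPath: a hidden <input> whose name attribute is "csrfmiddlewaretoken".
TOKEN_XPATH = "//input[@name='csrfmiddlewaretoken']/@value"


def extract_csrf_token(page_text):
    """Return the CSRF token found in the login page HTML, or None if absent."""
    tree = html.fromstring(page_text)
    values = tree.xpath(TOKEN_XPATH)
    # A page may repeat the token in several forms; the first value is enough.
    return str(values[0]) if values else None
```

With a session object, this would typically be called on the text of the login page fetched through that same session, so that the token and the session cookie belong together.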

Next, we would like to perform the login phase. In this phase, we send a POST request to the login URL. We use the payload that we created in the previous step as the data. We also send a header with the request and add a referer key to it, set to the same URL.
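A sketch of the login request under those assumptions. The function name log_in and its parameters are illustrative; session is expected to be a Requests session, login_url the sign-in page address, and payload the dictionary built earlier.

```python
def log_in(session, login_url, payload):
    """POST the login form through the session and return the response."""
    return session.post(
        login_url,
        data=payload,                    # form fields, including the CSRF token
        headers={"referer": login_url},  # referer set to the login URL itself
    )
```

Because the POST goes through the session, any cookie the site sets on a successful login is kept for the requests that follow.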

Now that we have logged in successfully, we will perform the actual scraping from the Bitbucket dashboard page.

In order to test this, let’s scrape the list of projects from the Bitbucket dashboard page. Again, we will use XPath to find the target elements and print out the results. If everything went OK, the output should be the list of projects that are in your Bitbucket account.

You can also validate the results of the requests by checking the status code returned by each one. It won’t always tell you that the login phase was successful, but it can be used as an indicator.
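A sketch of the extraction step. The XPath below is hypothetical: the class name “repo-name” is an assumption for illustration, and the real path has to be found by inspecting the dashboard page in the browser.

```python
from lxml import html

# Hypothetical XPath: "repo-name" is an assumed class name, not taken from
# the real dashboard markup.
PROJECT_XPATH = "//span[@class='repo-name']/text()"


def extract_project_names(page_text, xpath=PROJECT_XPATH):
    """Parse the dashboard HTML and return the text of every matching element."""
    tree = html.fromstring(page_text)
    return [str(name).strip() for name in tree.xpath(xpath)]
```

The page text passed in would come from a GET request made with the logged-in session, so the dashboard is served instead of the login form.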


for example:
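A sketch of such a check, assuming response is the object returned by a Requests call. The helper name report_status is an illustrative choice.

```python
def report_status(response):
    """Print and return whether the response has a non-error status code."""
    # response.ok is True for status codes below 400.
    print("OK:", response.ok, "| status code:", response.status_code)
    return response.ok
```

A login form that rejects the credentials often still answers with status 200, which is why this check is only an indicator.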

That’s it.


The full code sample can be found on GitHub.